Converting a website to an ebook

I recently ran through the ups and downs of taking a very well written blog and converting it to an EPUB ebook in order to read it on my Kindle.

Using a few command line applications I was able to produce a rather well formatted EPUB file that I could send to my Kindle using Amazon's Email to Kindle service.

The general principal can be applied to almost any website that renders using HTML on the server side (throws shade at SPAs), so blogs are often perfect for this, or Wikipedia articles, whatever suits your fancy.

Step 1 - Collect your URLs§

Start by collecting all of the URLs that you want into a single file, with each URL separated by a newline. I called my file urls (original I know).

Example of urls file:

https://austindw.com/harden-openwrt/

https://austindw.com/precompressed-assets-caddy/

Step 2 - Create the book§

Wow, two steps and we're done? Kinda.

I wrote up a script to do the rest of the steps in one fell swoop.

It requires a few tools be installed, specifically:

readability-cli- Homepage

- For Arch Linux users, this package is provided in the AUR, so a simple

yay -Sa readability-clishould be all you need.

pandoc- The macdaddy of document processors as far as I can tell. It's available on most Linux distro's in the package manager.

- For Arch Linux users, a simple

pacman -S pandocwill do the trick.

Now that we have the required dependencies, let's take a look at the script:

#!/bin/sh

set -eu

# Point this at whatever file your URLs are stored in.

urls="urls"

# Make the directory where we'll store the clean HTML for each post.

mkdir -p posts

# Iterate over the URLs, download them and clean them up with the readability-cli

# We use a count here to ensure that we organize the output posts in the same order that they are specified

# in the input file. This is helpful as you can lay out the full order of your book by just editing the URLs file.

count=1

cat "$urls" | while read url

do

output=$(printf "posts/%03d.html" $count)

readable -q --low-confidence force "$url" -o "$output" 2>&1 > /dev/null

count=$((count+1))

done

# Take all of the posts and put them into a book.

pandoc -o TheBook.epub posts/*.html

Save, make executable (chmod +x script.sh), and run the script and you'll have a fresh new EPUB named TheBook.epub at the end of it.

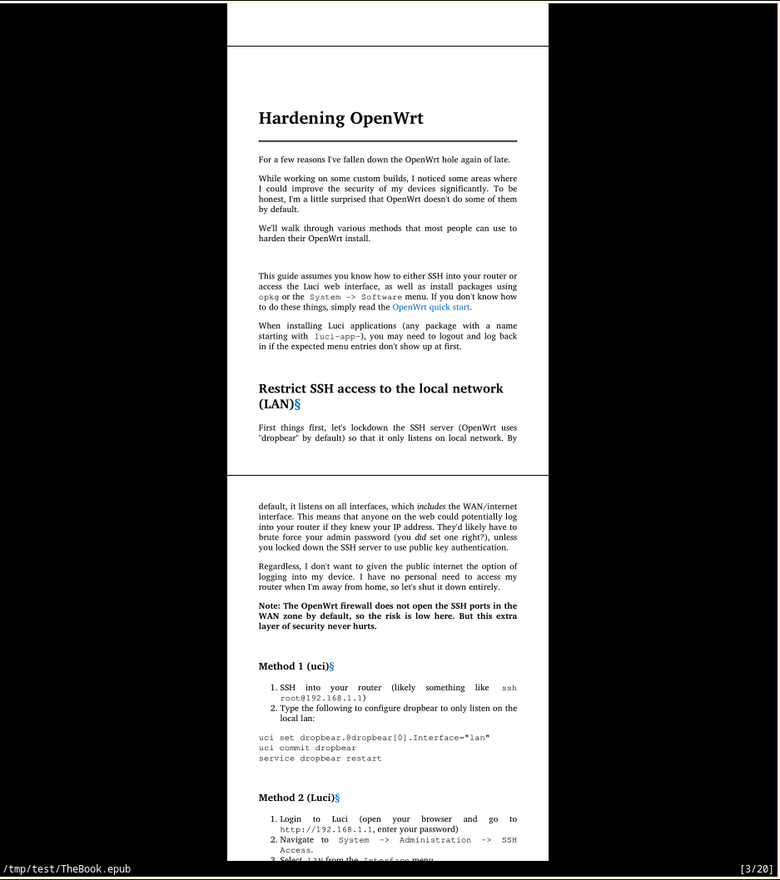

Open that up in your preferred ebook reader and you'll see a nicely formatted output like the following:

Obviously, you should edit the script to your needs. This post just acts as a guide, demonstrating the power of the readability-cli and pandoc.

Note: I used the --low-confidence force option when executing the readable command as I noticed a few posts that failed to extract with the default keep setting. Using force here worked just fine for my use case. Obviously, this may or may not work for your use case, so experiment.